In this post we will look at the implementation of the SharePoint to Data Lake replication that I discussed in my previous post.

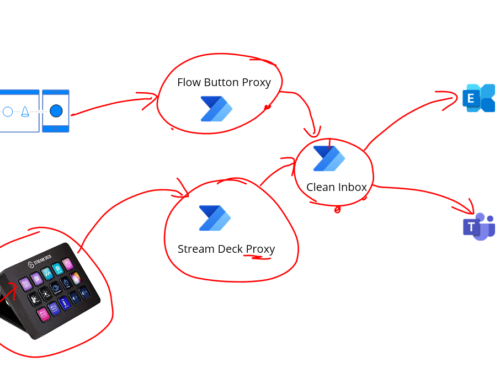

The step-by-step process flow is:

- The user uploads a file to the document library in SharePoint

- A Logic App listens for the file created event in the document library

- This Logic App will trigger within a couple of minutes of the file being uploaded

- The trigger Logic App will create a message about the file that was uploaded and will then call a child Logic App, which we will refer to as the replication Logic App. The child does the replication work

- The replication Logic App receives a message with the site and location of the file that was uploaded

- The replication Logic App then reads the file content

- The replication Logic App will then create a file in the Data Lake at the target path containing the content of the file

- The replication Logic App will then delete the file from SharePoint

- Creating the file in the Data Lake raises a storage event. The storage account has been configured to send storage events to an Event Grid topic

- The Synapse Pipeline has a trigger on the Event Grid topic, which starts the pipeline with info about the file that was created

- The Synapse Pipeline will then process the file

The diagram in the previous post shows what this looks like in more detail.

Design Considerations

There are a couple of design choices we made:

- Separation of trigger and processing logic

- The Logic App trigger for SharePoint is tied to a single document library in a single site. We may want to add trigger Logic Apps for other sites later, so we separated the trigger from the processing logic. That makes it easy to add more trigger Logic Apps, while the bulk of the work stays in the processing logic

- Networking

- Our Data Lake is connected to a private endpoint in our Data Platform. If you're using an ISE environment or Logic App Standard then you will have connectivity to a network, so you can create a line of sight

- If you're using a Consumption Logic App without network line of sight then you may have to alter your storage firewall, or alternatively work around the limitation by going via the data gateway server, which you can use with Logic Apps

- Data Lake Connector and Authentication

- You have a few choices here: managed identity, service principal or access key for authentication, and the storage connector or an HTTP call for the connection.

- We could use the Data Lake Gen 2 API via HTTP, but it takes 3 calls (Create File, Put Data, Flush) rather than 1. There is no connector for Gen 2 Data Lake.

- We decided to use the storage API directly with a Service Principal because it was in line with how we were doing a few other things. With normal Visual Studio there are a couple of unhelpful designer behaviours with user assigned managed identity, and we didn’t want to set up a new system assigned identity. So we reused the service principal we already had for another Data Lake scenario and called the blob storage API directly rather than using the connector. Different projects may have different preferences here.

- SharePoint Connector

- For the SharePoint connector we have tried to keep it simple and just use a single API Connection for SharePoint. This will be configured with our non-personal service account and it will have read/write and delete permission on the SharePoint document library we want to monitor for files being uploaded to.
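For comparison, here is a rough sketch of the three-call Gen 2 route mentioned under the Data Lake connector choices above. It only builds the method and URL for each call so you can see the shape of the sequence. The function name and the account, filesystem and path values are made up for illustration, and this is not what we ended up using.

```python
from urllib.parse import quote, urlencode


def gen2_upload_calls(account: str, filesystem: str, path: str, content: bytes):
    """Return the (method, url) pairs for writing one file via the Gen 2 (dfs) endpoint.

    The account, filesystem and path are placeholders supplied by the caller.
    """
    base = f"https://{account}.dfs.core.windows.net/{filesystem}/{quote(path)}"
    return [
        # 1. Create an empty file at the target path
        ("PUT", f"{base}?{urlencode({'resource': 'file'})}"),
        # 2. Append the content, starting at offset 0 (content goes in the body)
        ("PATCH", f"{base}?{urlencode({'action': 'append', 'position': 0})}"),
        # 3. Flush, passing the total length so the data is committed
        ("PATCH", f"{base}?{urlencode({'action': 'flush', 'position': len(content)})}"),
    ]
```

Each of those calls would be a separate HTTP action in the Logic App, which is why the single blob call was more attractive for us.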

Implementation

Let's look at how we can implement the replication process.

Trigger Logic App

First off we have the trigger Logic App. This uses the SharePoint trigger for when a file is created. We then have a condition where we check if it was a file or a folder that was created. If it’s a folder then we just ignore it and terminate the Logic App with a success status, because we don’t care about the folder getting created.

If it’s a file then we create a JSON message to pass to the child Logic App that will do the work. We pass in the full path and name of the file and the site the file is in.
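To make the folder check and the message a bit more concrete, here is a small sketch of the same logic in Python. The property names (`site`, `fullPath`, `name`) and the function name are assumptions for illustration; use whatever schema your child Logic App expects.

```python
import json
from typing import Optional


def build_replication_message(site: str, full_path: str, name: str,
                              is_folder: bool) -> Optional[str]:
    """Return the JSON message for the child Logic App, or None for folders.

    The property names are hypothetical, not the exact schema we deployed.
    """
    if is_folder:
        # Folder creations are ignored; the run simply ends successfully
        return None
    return json.dumps({"site": site, "fullPath": full_path, "name": name})
```

Returning nothing for a folder mirrors the terminate-with-success branch in the Logic App.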

The below image shows the simple trigger logic app.

As I mentioned earlier, we are trying to keep the trigger simple, so if we want to add another trigger for a different SharePoint site or document library we can just clone this one and change the parameters.

Replicate file to Data Lake Logic App

Next up we have the Logic App which does most of the work. As you saw above, this gets called by the trigger Logic App.

When the Logic App starts we are passing some info about the file that was created:

- Full Path

- Name

- Site

We then use the Get file metadata using path action so we have the info about the file if we need it for processing. You might do things like send notifications to users or monitoring tools about the files being transferred.

Next we will get the content of the file using the SharePoint connector.

We will then call Azure AD to get a token for our Service Principal.

We then use the HTTP connector to call the blob API to create the file.

If we take a look at the HTTP action you can see we do a simple client_credentials request to get a token. The main reason we did this was that, in our scenario, the combination of the networking requirement and some challenges in using managed identity meant that this was the best approach.

For the Blob API, the audience you request the token for needs to be:

https://storage.azure.com/.default
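As a rough illustration, the token call could be built like this outside of a Logic App. This is a sketch that assumes the Azure AD v2.0 token endpoint; the function name is made up and the tenant ID, client ID and secret are placeholders you would supply (ideally from Key Vault). It only builds the request and does not send it.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build a client_credentials token request for the storage audience."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        # The audience from the post, passed as the scope parameter
        "scope": "https://storage.azure.com/.default",
    }).encode("ascii")
    return Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )
```

Sending it with `urllib.request.urlopen` should return JSON containing an `access_token` property, which is the value the next action uses.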

Once we have the token we can make the call to the Blob API using a URL in the format:

https://[DataLake-Name].blob.core.windows.net

We can pass in the SharePoint file name as the blob name and the file content from the SharePoint action.
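Here is a hedged sketch of what that blob call looks like as a plain HTTP request. The function name, container and blob path are placeholders, and the `x-ms-version` value is just one version I know supports bearer tokens, not necessarily the one we deployed with. The request is built but not sent.

```python
from urllib.parse import quote
from urllib.request import Request


def build_put_blob_request(account: str, container: str, blob_path: str,
                           content: bytes, token: str) -> Request:
    """Build a Put Blob request that writes the file content in a single call."""
    url = f"https://{account}.blob.core.windows.net/{container}/{quote(blob_path)}"
    headers = {
        # Token from the client_credentials call
        "Authorization": f"Bearer {token}",
        # Assumed API version; any version that supports Azure AD auth should do
        "x-ms-version": "2021-08-06",
        # Required by Put Blob to say what kind of blob is being created
        "x-ms-blob-type": "BlockBlob",
        "Content-Type": "application/octet-stream",
    }
    return Request(url, data=content, method="PUT", headers=headers)
```

In the Logic App these map to the method, URI, headers and body fields of the HTTP action.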

For the path in the Data Lake, we decided to have a folder called “SharePoint” in our landing area, and all files coming in from SharePoint live in a folder structure underneath it. There is a single folder under the SharePoint folder for each SharePoint site that we have a trigger Logic App for. Within that folder we replicate the exact same folder path in the Data Lake that the file was placed in within SharePoint.
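The path convention is easier to see as code. This is a minimal sketch; the function name is hypothetical, and how you derive the site folder name from the site URL is up to you.

```python
def data_lake_path(site_name: str, sharepoint_folder: str, file_name: str) -> str:
    """Map a SharePoint file location to its path under the landing area.

    site_name is the per-site folder; sharepoint_folder is the folder path
    the file was placed in within SharePoint.
    """
    parts = ["SharePoint", site_name]
    folder = sharepoint_folder.strip("/")
    if folder:
        # Keep the SharePoint folder structure exactly as it was
        parts.append(folder)
    parts.append(file_name)
    return "/".join(parts)
```

For example, a file `plan.xlsx` in `Shared Documents/Budgets` on a site we call `Finance` would land at `SharePoint/Finance/Shared Documents/Budgets/plan.xlsx`.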

Running the Logic Apps

Once we have deployed our Logic Apps we can let users upload files to SharePoint. Within a couple of minutes an upload will trigger the first Logic App, which checks for changes in SharePoint. This then calls the child Logic App, which reads the file and copies it to the Data Lake storage account.

When the file is written to the Data Lake it raises a storage account event. The Synapse Pipeline is subscribed to these events, so the pipeline will then process the file.
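If you are curious what the pipeline trigger has to work with, the sketch below pulls the container, folder and file name out of a blob created event. It assumes the standard Event Grid storage event shape, where the subject looks like `/blobServices/default/containers/<container>/blobs/<path>`. The function name and the returned keys are my own choice for illustration.

```python
from typing import Optional


def parse_blob_created(event: dict) -> Optional[dict]:
    """Extract container, folder and file name from a BlobCreated event."""
    if event.get("eventType") != "Microsoft.Storage.BlobCreated":
        return None  # Not a file creation, nothing to process
    prefix = "/blobServices/default/containers/"
    subject = event.get("subject", "")
    if not subject.startswith(prefix):
        return None
    # Split "<container>/blobs/<path>" into its two halves
    container, _, blob_path = subject[len(prefix):].partition("/blobs/")
    # Everything before the last slash is the folder, the rest is the file
    folder, _, file_name = blob_path.rpartition("/")
    return {"container": container, "folderPath": folder, "fileName": file_name}
```

The pipeline can then use the folder and file name to decide which processing to run.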

What might we revisit later

At some point we will migrate this solution from ISE to either Logic App Standard or Consumption. When we do, we will probably revisit the networking, because the connectivity to the Data Lake will change once we move off ISE.

With Logic App Standard we can use VNet integration on the app, or with Consumption we could use the data gateway to access storage via the private endpoint.

At that point we will also revisit the authentication. I'd have preferred to use one of our user assigned identities, but I had a couple of issues getting that working, as I mentioned earlier.

Constraints

There are a couple of constraints to be aware of here, mainly on the Logic App message size. Logic App connectors can only handle messages up to a certain size. In our case we expect users to upload fairly small data sets to SharePoint, which then take part in our bigger data platform processing.

SharePoint itself can hold larger files, so if our needs grow and we need to replicate bigger data sets, we will look at custom code or a more advanced Logic App/Pipeline approach that chunks the file during replication.
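We have not built the chunked version, but the core idea is simple enough to sketch. The helper below is hypothetical and the chunk size is whatever fits under your connector limit. It yields each chunk with its offset, which is what an append-style upload would need.

```python
from typing import Iterator, Tuple


def iter_chunks(content: bytes, chunk_size: int) -> Iterator[Tuple[int, bytes]]:
    """Yield (offset, chunk) pairs covering the whole content in order."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be a positive number of bytes")
    for offset in range(0, len(content), chunk_size):
        # The last chunk may be shorter than chunk_size
        yield offset, content[offset:offset + chunk_size]
```

Each chunk would then be sent as its own call, with a final call to commit the file once all chunks are written.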