Recently ive been experimenting with Synapse and wanted to look at CosmosDB Synapse Link. The usecase im experimenting with is the idea that I have messages from a system being processed through my integration platform and I want to be able to integrate them into my analytics solution so that we can do data integration scenarios from synapse and also Power BI analysis. In this post I am investigating how to setup and use Synapse Link with Cosmos DB and wanted to think about how it can help me.

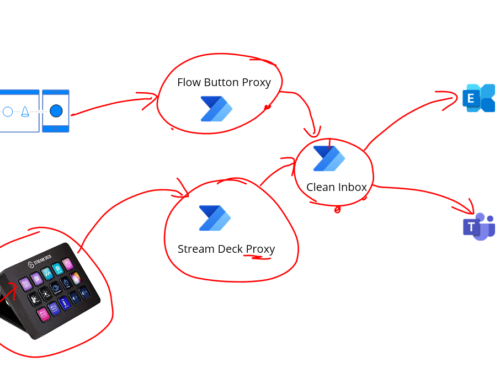

My starting point is a scenario where I have an integrated solution with events about a railcar which come into my integration platform and then we pub/sub them with service bus to other systems who are interested in being aware of the event. It looks a bit like below.

This is a typical pub/sub type pattern where the receiving Logic App is getting the message via some transport, it is transforming the data to a canonical format, maybe doing some enrichment then it publishes a message to service bus and multiple subscribers can get copies of the message and do whatever processing is required to get the data into the destination systems.

If I start to bring a persisted message store idea in the pattern with Cosmos then there is the question of if the Cosmos is used as an additional subscriber or is internally part of the process. In this case to keep it simple I might just archive message on the receiver Logic App. This means I can look them up later if I want to for any interface or I can also allow Synapse to Query them via Synapse Link. This would modify the diagram to look like this.

The following are my notes on setting it up and some thoughts on the way.

Understanding the architecture

There is a pretty good overview of the synapse link feature on this page: https://docs.microsoft.com/en-us/azure/cosmos-db/synapse-link

It talks about this diagram in particular.

The key thing in the diagram is that the synapse link feature adds the analytics store. This is then synchronized with the transactional store and it means that your operational workloads (my logic apps) running against the transaction store will not be affected by any big analytics jobs happening on the analytical store. The 2 stores are optimized for those different use cases.

The next attractive feature is that with Synapse Link I should be able to query my Cosmos DB and access near real-time data without the need to develop data flows or ELT processes to ingest data through numerous steps to get it to the point I can begin using it.

Those 2 in particular are very attractive for my scenario.

Setting up Cosmos

In Cosmos when you setup your account you need to ensure the Synapse Link feature is enabled.

The first thing I found was that the Synapse Link feature doesnt seem to be available on Serverless Cosmos DB. I had to use a provisioned one. I might be wrong here as I couldnt really find any documentation to confirm this either way so if someone can correct me please add a comment, but I setup a serverless Cosmos DB and the option didnt seem to be available.

When I did setup the Provisioned instance then there were 2 key steps.

- On the overview blade for your cosmos account turn on the synapse link feature (or i think you can also do it in the features blade)

- When you create a container you need to turn on the analytical store

3. If you want to modify the analytical store time to live for objects then you can do it in the settings for the collection

Loading some test data to Cosmos

Next I created a logic app with a loop in it to go and create a bunch of documents in the collection. I can create a message and then just use the create or update document action as below.

I now have a bunch of items in the collection in Cosmos and I can see the json object which has some of my custom properties and some of the attributes that Cosmos needs.

Thats it for the Cosmos side of things, very easy.

Setup Synapse

Next up we will look to use Synapse Serverless SQL to query the Cosmos DB. There are a number of steps to doing this.

First off I need a credential to be able to store the key for Cosmos. I can create a database scoped credential for Cosmos and save the secret key from Cosmos.

CREATE DATABASE SCOPED CREDENTIAL Cosmos

WITH IDENTITY = 'SHARED ACCESS SIGNATURE',

SECRET = '[Read Only Key from Cosmos]';

I will then create a view of my records in Cosmos. You can see below we are using OPENROWSET with the cosmos provider and I am passing in my credential for Cosmos which we just created.

CREATE OR ALTER VIEW Cosmos_Loaded_Railcars

AS

SELECT *

FROM OPENROWSET(

PROVIDER = 'CosmosDB',

CONNECTION = 'Account=[Cosmos-DBAccount];Database=[CosmosDB-Database]',

OBJECT = '[CosmosDB-Collection]',

CREDENTIAL = 'Cosmos'

)

WITH

(

DocumentID varchar(max) '$.id',

LOAD_SLIP_ID varchar(max) '$.LOAD_SLIP_ID',

RAIL_CAR_ID varchar(max) '$.RAIL_CAR_ID',

LOAD_COMPLETE_DATE_TIME varchar(max) '$.LOAD_COMPLETE_DATE_TIME',

PRODUCTION_DATE varchar(max) '$.PRODUCTION_DATE',

NET_WEIGHT_LBS varchar(max) '$.NET_WEIGHT_LBS',

TRANSACTION_DATE_TIME varchar(max) '$.TRANSACTION_DATE_TIME'

)

As LoadedRailcars

In the query I am using json path on the collection so I can create columns for the view result set. This will give me a nice SQL like view for my analytics.

If I want to query the view then I can just do a query like below from the view where I am using a date field to query the records in Cosmos which are greater than the date provided.

SELECT TOP (100) [DocumentID]

,[LOAD_SLIP_ID]

,[RAIL_CAR_ID]

,[LOAD_COMPLETE_DATE_TIME]

,[PRODUCTION_DATE]

,[NET_WEIGHT_LBS]

,[TRANSACTION_DATE_TIME]

FROM [dbo].[Cosmos_Loaded_Railcars]

WHERE [LOAD_COMPLETE_DATE_TIME] > '2021-08-04T13:53:57.00Z'I really like the simplicity of this approach. In less than an hour I have a view which queries my data in Cosmos and I can do some really powerful analytics and best of all there is no pipelines to have to manage and maintain.

Things id like to see in the future

There were a couple of things id like to see improved to help make this even better. The couple of thoughts that jump out are:

- Id like to be able to use the External Data Source for my Cosmos views. When you do OPENROWSET its handy that you can supply credentials as a reference, note that some samples show passing the key inline which is not a good idea. Id also like to be able to just setup an external data source for Cosmos and pass a reference to that so I just keep the Cosmos settings in 1 place if multiple views use it

- There is an option in synapse to add a linked resource where you can add a cosmos db. This seems to only help for data flow or sparks stuff. It doesnt seem to help you with Serverless SQL. Bit of a shame, feels like there is an opportunity to make things a bit easier there maybe.

- I could only get the query to work using the cosmos db key. I would like to be able to configure the managed identity for my synapse workspace to be able to access the Cosmos Account and then just reference the managed identity for my credential for cosmos which would save the need to have a key. I tried doing this but it didnt seem to work and i couldnt figure out if this is supported or not but it would definitely be a good thing

Trade Offs

I think there are a few constraints listed in the articles I have linked below which are worth reading so you are aware of limitations. I think possibly one of the main things to also be aware of is that the query of Cosmos is the current state (with the possible latency of the sync internally in Cosmos to the analytical store) but it will be very close to real-time. There isnt any concept of historical data or anything like that which people are sometimes looking for in Synapse so in those cases you probably want to import your view into a table in Synapse so you can see how the data changes over time or you may choose to do a different approach maybe. I think this is more about the current state.

Final Thoughts

I really like the simplicity of setting this up and the quick time to value. It also looks like it is a pretty robust and scalable way to go for these use cases. With it being pretty newish there are a few features and constraints to be aware of on the FAQ page but I really like this feature.

Other useful sources

On the way there were a few articles I found helpful in helping me get my proof of concept up and running. They include:

- https://docs.microsoft.com/en-us/learn/modules/query-azure-cosmos-db-with-sql-serverless-for-azure-synapse-analytics/4-perform-complex-queries

- https://www.sqlservercentral.com/articles/using-azure-synapse-link-for-azure-cosmos-db