If you use Logic Apps then you know performance is sometimes a challenge. In particular, how do you cache the responses from logic apps that you call repeatedly and that return the same data each time? There are a few different ways to do this, but I wanted to share how I am doing it with Azure API Management, using an approach inspired by some of the goodness buried away in the BizTalk Migration Toolkit, which deserves to be talked about more.

These days I am working mainly with new Azure iPaaS customers, so I am not that interested in the Migration Toolkit as a way of migrating BizTalk to Azure. I am, however, pretty interested in the patterns it recommends, because they show what Microsoft thinks an Azure integration solution could look like. With that in mind I have been looking at the internals of what the toolkit generates, and I do like a few of the things it does with Azure APIM in the target state solution. One approach that is quite cool is the way it retrieves the callback URL for a logic app at runtime by calling the management API. I'd assume this is what the Logic App connector does under the hood anyway, but using the same approach lets me put a cache in front of my logic apps.

If you want to find out more about how the toolkit does it, look for getlogicappcallbackurl in the JSON file linked below.

https://github.com/Azure/aimazure/blob/main/src/messagebus/routingmanager/routingmanager.apim.json

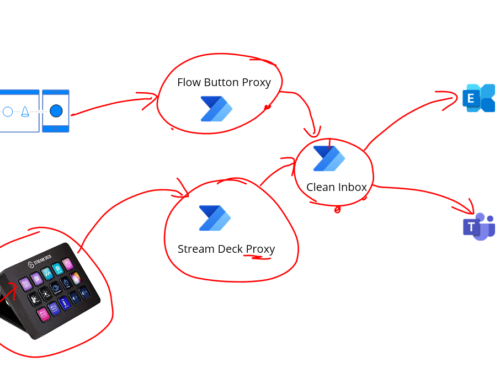
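To make the callback lookup a bit more concrete, here is a minimal Python sketch of the management API request involved. It is not the toolkit's code. It only builds the URL for the Azure Resource Manager `listCallbackUrl` operation and reads the `value` field from the response. The trigger name `manual`, the `2016-06-01` API version and the function names are my assumptions for illustration. You would send the request as a POST with a bearer token for the management API, which I have left out here.

```python
from urllib.parse import quote

ARM_BASE = "https://management.azure.com"
API_VERSION = "2016-06-01"  # assumption: API version for Consumption logic apps


def build_callback_url_request(subscription_id: str,
                               resource_group: str,
                               workflow_name: str,
                               trigger_name: str = "manual") -> str:
    """Build the management API URL that returns a logic app's callback URL.

    trigger_name defaults to "manual", which is an assumption; use whatever
    your HTTP request trigger is actually called.
    """
    path = (
        f"/subscriptions/{quote(subscription_id, safe='')}"
        f"/resourceGroups/{quote(resource_group, safe='')}"
        f"/providers/Microsoft.Logic/workflows/{quote(workflow_name, safe='')}"
        f"/triggers/{quote(trigger_name, safe='')}/listCallbackUrl"
    )
    return f"{ARM_BASE}{path}?api-version={API_VERSION}"


def extract_callback_url(response_body: dict) -> str:
    """Pull the signed callback URL out of the listCallbackUrl response."""
    return response_body["value"]
```

The URL you get back already contains the SAS signature, so whoever holds it can call the logic app directly. That is why it makes sense to keep this lookup inside APIM rather than handing the URL around.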

I prefer to build APIM solutions with Terraform, so I wanted to build a Logic App helper API with three operations:

- Get Callback URL: returns the URL you need to call a given logic app.

- Run Logic App: lets you pass the name of a logic app and have the API call that logic app for you. This is handy when you want to avoid a dependency between logic apps in your ARM templates.

- Run Logic App with Cache: runs the logic app but lets you supply a cache key and a cache timeout, so you get the response from the cache if one is there and reduce your latency.
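The logic behind the cached operation can be sketched in a few lines of Python. This is only an illustration of the cache-aside idea, not my APIM policy. In APIM itself you would typically reach for the `cache-lookup-value` and `cache-store-value` policies. The `TtlCache` class and the `call` parameter are hypothetical names, and `call` stands in for whatever actually invokes the logic app.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TtlCache:
    """Tiny in-memory cache keyed by a caller-supplied cache key."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock  # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_call(self, cache_key: str, ttl_seconds: float,
                    call: Callable[[], Any]) -> Any:
        """Return the cached response for cache_key, or run call() and cache it."""
        now = self._clock()
        entry = self._entries.get(cache_key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the logic app is not called
        response = call()  # cache miss or expired: call the logic app
        self._entries[cache_key] = (now + ttl_seconds, response)
        return response
```

The caller chooses both the key and the timeout, which mirrors the operation above: two requests with the same cache key inside the timeout window get the same response, and only the first one pays the cost of running the logic app.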

The video below walks you through the approach: