This is a cross-post of my article in my Integration Playbook.

If you want to use Logic Apps to integrate with Azure Data Lake Gen2, unfortunately there isn't an out-of-the-box connector to do this. There is, however, a REST API which you can use. Following on from the series of articles about helper Logic Apps, I wanted to talk about the helper Logic Apps we have for integrating with Gen2 Data Lake.

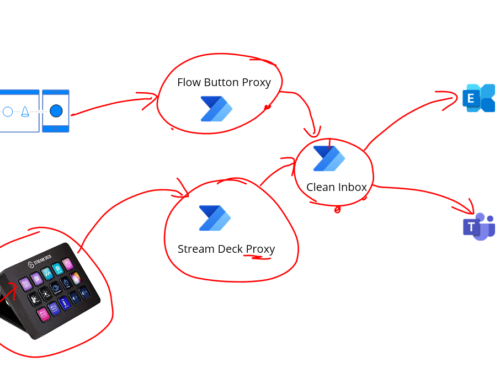

The diagram shows our helper solution, where other Logic Apps can use our Data Lake helper Logic Apps to save messages to and retrieve messages from the Data Lake.

There are 2 main operations we need in order to support the use cases I described in previous articles: using Data Lake as a message store for archive purposes, or storing messages while notifications are passed to other interfaces which then process the message.

We need the following 2 operations:

- Save File

- Get File

Save File

In order to save a file there are the following steps:

- Authenticate

- Create a file in the data lake

- Append data to the file

- Flush the file to save it

The top level of the helper Logic App is below:

The input will take a message with the below JSON schema

This gives the consumer an easy, generated interface for submitting data to be saved. There are 3 properties to supply: the content type, the path to the file and the content.
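As a rough illustration of that input message, the sketch below builds a request body with the three properties. The property names `contentType`, `path` and `content` are assumptions made for this example; the helper's real schema may name them differently.

```python
import json

# Hypothetical property names; the helper's real schema may differ.
REQUIRED_FIELDS = ("contentType", "path", "content")


def build_save_request(content_type: str, path: str, content: str) -> str:
    """Return the JSON body a consumer would post to the SaveFile helper."""
    message = {"contentType": content_type, "path": path, "content": content}
    # Reject empty values early so the helper never sees a half-filled message.
    missing = [name for name in REQUIRED_FIELDS if not message[name]]
    if missing:
        raise ValueError("missing values for: " + ", ".join(missing))
    return json.dumps(message)


if __name__ == "__main__":
    print(build_save_request("application/json",
                             "integration/orders/1001.json",
                             '{"id": 1001}'))
```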

In the helper we encapsulate the root URL for the data lake in the storage account, but the path in the hierarchical namespace is supplied by the consumer who wants to save a file.

I have also implemented a helper to do the authentication so we can get an access token and reuse it on multiple calls, which is more efficient than authenticating on each HTTP step. I'll talk about that later.

The first step after the authentication is to create the file, which you can see below. We craft the URL by combining the root URL of the data lake (the format is https://[StorageAccountName].dfs.core.windows.net) with the path to the file, and in the query string we set resource=file.

We also set the token from the authentication helper as a bearer token.

Next up we need to append the content to the file we have just created. To do this we make another HTTP call, but this time we use the append action. We also need to set the content length, which we calculated with the length expression and stored in a variable at the start of the Logic App.

The above will append the data to the file and finally we need to flush it to have it saved. To do this we make a 3rd HTTP call as shown below.

This time we use the flush action but we also need to specify the position we need to flush to as shown above.
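The create, append and flush calls can be sketched outside Logic Apps as three request descriptions. This is a minimal sketch and not the Logic App definition itself: the `HttpCall` type and `build_save_calls` function are hypothetical names, the `x-ms-version` value is an assumed API version, and the path is assumed to start with the file system (container) name. Nothing is sent over the network.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional
from urllib.parse import quote

API_VERSION = "2018-11-09"  # assumed value for the x-ms-version header


@dataclass
class HttpCall:
    """A description of one HTTP request (hypothetical helper type)."""
    method: str
    url: str
    headers: Dict[str, str]
    body: Optional[bytes] = None


def build_save_calls(root_url: str, path: str, content: str,
                     content_type: str, token: str) -> List[HttpCall]:
    """Describe the create, append and flush calls for one file."""
    data = content.encode("utf-8")  # the length is counted in bytes
    file_url = f"{root_url.rstrip('/')}/{quote(path.lstrip('/'))}"
    common = {"Authorization": f"Bearer {token}", "x-ms-version": API_VERSION}
    return [
        # 1. Create an empty file.
        HttpCall("PUT", f"{file_url}?resource=file", dict(common)),
        # 2. Append the content at position 0.
        HttpCall("PATCH", f"{file_url}?action=append&position=0",
                 {**common, "Content-Type": content_type,
                  "Content-Length": str(len(data))}, data),
        # 3. Flush up to the total number of bytes appended.
        HttpCall("PATCH", f"{file_url}?action=flush&position={len(data)}",
                 dict(common)),
    ]


if __name__ == "__main__":
    for call in build_save_calls("https://myaccount.dfs.core.windows.net",
                                 "integration/orders/1001.xml",
                                 "<order/>", "application/xml", "<token>"):
        print(call.method, call.url)
```

The flush position has to match the number of bytes appended, which is why the sketch derives both values from the same encoded byte string.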

At this point you have a Logic App which can save a message as a file to the Data Lake.

Get File

In the Get File Logic App we again need a token to use. We could probably specify the service principal on the HTTP action, but to keep the 2 Logic Apps consistent, so the approach is easier for the team to follow, we get the token from the helper. This also allows us to potentially do some caching in the helper if we want.

The input to the Logic App will be a JSON message with the path of the file to retrieve.

To get the file we will use the HTTP action and do a GET request on the Data Lake. The below picture shows an example of the GET request.

We then simply return the body of the response from the HTTP action as the body of the response from the Logic App.
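For comparison with the Logic App HTTP action, the GET request can be described in a few lines. This is a sketch under assumptions: `build_get_call` is a hypothetical name, the `x-ms-version` value is an assumed API version, and the path is assumed to start with the file system (container) name.

```python
from typing import Dict, Tuple
from urllib.parse import quote

API_VERSION = "2018-11-09"  # assumed value for the x-ms-version header


def build_get_call(root_url: str, path: str,
                   token: str) -> Tuple[str, str, Dict[str, str]]:
    """Return (method, url, headers) for reading one file from the data lake."""
    url = f"{root_url.rstrip('/')}/{quote(path.lstrip('/'))}"
    headers = {"Authorization": f"Bearer {token}", "x-ms-version": API_VERSION}
    return "GET", url, headers


if __name__ == "__main__":
    method, url, headers = build_get_call(
        "https://myaccount.dfs.core.windows.net",
        "integration/orders/1001.xml", "<token>")
    print(method, url)
```

The response body of that request is what the helper hands back to its caller unchanged.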

This gives us a nice, easy helper Logic App to retrieve messages from the Data Lake.

Authenticate Helper

In the authenticate helper we have a Logic App which will be triggered by GetFile and SaveFile and it will call Azure AD to get a token which will allow access to the Data Lake via the REST API.

The picture below shows the high-level view of the Logic App, where we receive an HTTP request, make an HTTP call to Azure AD, parse the JSON response and return the access token.

The HTTP action to authenticate will request a token using the configuration below.

You will need to have set up a Service Principal in Azure AD and given it the right permissions on the Azure Storage account to be able to access the data lake. There is a really good walkthrough of this by Moim Hossain, worth checking out here:

https://www.integration-playbook.io/docs/data-lake-gen-2-helper-logic-app

At a high level you need to ensure you have done the following:

- Create service principal

- Grant the service principal the user_impersonation permission on the Azure Storage API

- Give your service principal the Storage Blob Data Contributor role on your Storage Account IAM tab

- Set up any access permissions on folders within the data lake you want your service principal to be able to access

When you have got the response from the HTTP action, you can parse it with the below JSON schema and get the access token.

You then simply return the access token.
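The token request and the parsing step can be sketched as below. This assumes the client credentials flow against the Azure AD v1 token endpoint with `https://storage.azure.com/` as the resource; the endpoint version the helper actually uses is an assumption, and `build_token_request` and `extract_access_token` are hypothetical names. The sketch only builds the request and parses a response, it does not call Azure AD.

```python
import json
from typing import Dict, Tuple
from urllib.parse import urlencode

STORAGE_RESOURCE = "https://storage.azure.com/"


def build_token_request(tenant_id: str, client_id: str,
                        client_secret: str) -> Tuple[str, Dict[str, str], str]:
    """Return (url, headers, form_body) for a client credentials token request."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/token"
    form = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": STORAGE_RESOURCE,
    })
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    return url, headers, form


def extract_access_token(response_body: str) -> str:
    """Pull the access token out of the JSON token response."""
    payload = json.loads(response_body)
    return payload["access_token"]


if __name__ == "__main__":
    url, headers, form = build_token_request("<tenant-id>", "<client-id>", "<secret>")
    print("POST", url)
    print(extract_access_token('{"token_type": "Bearer", "access_token": "abc"}'))
```

In a real setup the client secret would come from a secure store such as Key Vault rather than being passed around in plain text.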

Using the Helpers

Now that you have the Data Lake helpers in place, it's simple to save and get messages from Data Lake.

To get a message from Data Lake we can use the helper as in the picture below, where we simply provide the path to the file we want.

To save a message to the Data Lake we can simply use the helper Logic App like below

We just need to pass the path to save the file, the content and the content type.

Now we can save a message to Data Lake and pass a pointer to that message to other interfaces, which can then retrieve the file if needed.

Points to Note

- x-ms-version header

In the HTTP calls to Data Lake, don't forget the API version header (x-ms-version), as shown in the examples above.

- Content Length for XML

I found that with XML messages I would sometimes get errors because the length hadn't been calculated correctly for the flush, and the call would fail with the error message below.

The uploaded data is not contiguous or the position query parameter value is not equal to the length of the file after appending the uploaded data.

I found the easiest way to avoid this issue was to encode the XML using the base64() expression in the Logic App before calling the SaveFile helper Logic App, and then to decode it with the base64ToString() expression once you have retrieved the message from the GetFile helper Logic App.
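One plausible explanation, which is an assumption on my part rather than something confirmed against the service, is that a character count and a byte count differ as soon as the XML contains non-ASCII characters, while base64 text is pure ASCII so the two counts always match. The sketch below demonstrates that difference in Python; the function names are hypothetical.

```python
import base64


def char_and_byte_lengths(text: str) -> tuple:
    """Return (number of characters, number of UTF-8 bytes) for the text."""
    return len(text), len(text.encode("utf-8"))


def encode_for_save(text: str) -> str:
    """Base64-encode the message before saving, as base64() would."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def decode_after_get(encoded: str) -> str:
    """Decode a retrieved message, as base64ToString() would."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    xml = "<note>Café £5</note>"
    print(char_and_byte_lengths(xml))                    # the counts differ
    print(char_and_byte_lengths(encode_for_save(xml)))   # the counts match
    print(decode_after_get(encode_for_save(xml)) == xml)
```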

- Folder permissions

Don't forget that one of the benefits of the Data Lake is the fine-grained access control within the hierarchical namespace. First, remember to set the right permissions for your service principal. This also means you can have different folders for different things. For example, you might have a root folder for integration-related messages which your helper Logic Apps have access to, including subfolders, and other root folders for things which don't relate to your interfaces.

- Blob Lifecycle Management in Data Lake

In the Gen2 Data Lake section of the storage account there is a menu option for the Lifecycle Management of items in the Data Lake. This allows you to configure rules to archive blobs off to cool storage or delete them if they have been unused for a period of time. This means you can potentially pass messages around between interfaces, have them available for a year and then delete them, or delete messages in 1 folder after 30 days while never deleting those in another folder. You have a number of options here depending on your needs, which can help you avoid holding data long term and minimize unnecessary cost.
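As an illustration of such a rule, the sketch below builds a lifecycle policy document following the Azure Storage lifecycle management policy JSON format. The rule name, the prefix and the day counts are made-up example values, and `build_lifecycle_policy` is a hypothetical helper. Note that this sketch uses the days since last modification; check the current Azure documentation before applying a policy like this.

```python
import json


def build_lifecycle_policy(prefix: str, cool_after_days: int,
                           delete_after_days: int) -> dict:
    """Build a policy that cools and later deletes blobs under one prefix."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "expire-integration-messages",  # example rule name
                "type": "Lifecycle",
                "definition": {
                    # The prefix starts with the container (file system) name.
                    "filters": {"blobTypes": ["blockBlob"],
                                "prefixMatch": [prefix]},
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {
                                "daysAfterModificationGreaterThan": cool_after_days
                            },
                            "delete": {
                                "daysAfterModificationGreaterThan": delete_after_days
                            },
                        }
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    policy = build_lifecycle_policy("messages/integration/", 30, 365)
    print(json.dumps(policy, indent=2))
```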