Recently I had a requirement to extend one of our BizTalk solutions which handles user synchronisation. We have 2 interfaces for user synchronisation: one supporting applications which require a batch interface and one which supports messaging. In this case the requirement was to send a daily batch file of all users to a partner so they could set up access control for our users to their system. This is quite a common scenario when you use a vendor’s application which doesn’t support federation.

In this instance I was initially under the impression that we would need to send the batch as a file over SFTP but once I engaged with the vendor I found we needed to upload the file to a bucket on AWS S3. This then gave me a few choices.

- Logic Apps Bridge

- Use 3rd Party Adapter

- Look at doing HTTP with WCF Web Http Adapter

- Azure Function Bridge

Knowing that there is no out of the box BizTalk adapter for S3, my first thought was to use a Logic App and an AWS connector. This would allow the Logic App to sit between BizTalk and the S3 bucket and to use the connector to save the file. To my surprise, at the time of writing there aren’t really any connectors for most of the AWS services. That’s a shame, as with the per-use cost model for Logic Apps this would have been a perfect “better together” use case.

My 2nd option was to consider a 3rd party or community BizTalk adapter. I know there are a few different choices out there, but on this particular project we only had a few days to implement the solution from when we first got the requirement. It’s supposed to be a quick win, but unfortunately in IT today the biggest blocker I find on any project is IT procurement. You can be as agile as you like, but the minute you need to buy something new, things grind to a halt. I expected buying an adapter would take weeks for our organisation (being optimistic), and then there is the money we would spend discussing and managing the procurement, plus the deployment across all environments etc. S3 is not a strategic thing for us, it’s a one-off for this interface, so we ruled out this option.

The 3rd option I considered came from remembering Kent Weare’s old blog post http://kentweare.blogspot.co.uk/2013/12/biztalk-2013integration-with-amazon-s3.html where he looked at using the Web Http adapter to send messages over HTTP to the S3 bucket. While this works really well, there is quite a bit of plumbing work you need to do to deal with the security side of the S3 integration. Looking at Kent’s article, I would need to write a pipeline component which would configure a number of context properties to set the right headers on the request. If we were going to be sending much bigger files and making a heavier bet on S3 I would probably have used this approach, but we are going to be sending 1 small file per day, so I don’t fancy spending all day writing and testing a custom pipeline component. I’d like something simpler.

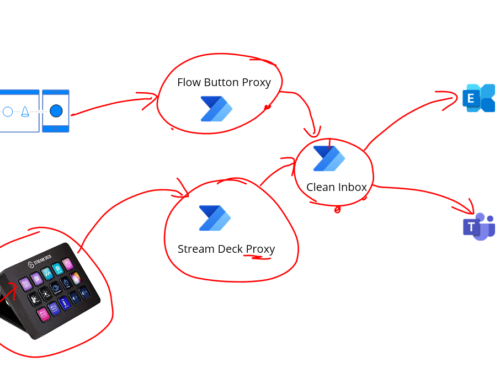

Remembering my BizTalk + Functions article from the other day, I thought about using an Azure Function which would receive the message over HTTP and then internally use the AWS SDK to save the message to S3. I would then call this from BizTalk using only out of the box functionality. This makes the BizTalk to function interaction very simple. The AWS save also becomes very simple because the SDK takes care of all of the hard work.

The below diagram shows what this looks like:

To implement this solution I took the following steps:

Step 1

I created an Azure Function and added a file called project.json. In this file I modified the JSON to import the AWS SDK, like in the below picture:

Step 2

In the function I imported the Amazon namespaces

Step 3

In the function I added the below code to read the HTTP request and to save it to S3

Note that I have a helper class called FunctionSettings which contains some settings like the key and bucket name etc. I am not showing these on the post but you can use the various options for managing config with a function depending upon your preference.
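The core of step 3 can be sketched roughly as below. This is a Python illustration of the idea rather than the code from the function itself, which presumably used the AWS SDK imported in step 1. The fields on `FunctionSettings`, the `users/users-YYYYMMDD.csv` key naming and the function names are all assumptions made for the example. The `s3_client` argument is anything exposing `put_object(Bucket=..., Key=..., Body=...)`, which is the shape of the boto3 S3 client call.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class FunctionSettings:
    """Hypothetical stand-in for the settings helper mentioned in the post."""
    bucket_name: str
    key_prefix: str = "users"  # assumed folder-style prefix inside the bucket


def build_object_key(settings: FunctionSettings, now: datetime) -> str:
    """Name the daily file after the date (an assumed naming scheme)."""
    return f"{settings.key_prefix}/users-{now:%Y%m%d}.csv"


def save_request_body_to_s3(body: bytes, s3_client, settings: FunctionSettings,
                            now: Optional[datetime] = None) -> str:
    """Write the raw HTTP request body to S3 and return the object key used."""
    if not body:
        # Refuse to create an empty object if the caller posted nothing.
        raise ValueError("request body is empty; nothing to upload")
    now = now or datetime.now(timezone.utc)
    key = build_object_key(settings, now)
    # The client does the signing and transport work, so this is the whole save.
    s3_client.put_object(Bucket=settings.bucket_name, Key=key, Body=body)
    return key
```

Passing the client in as a parameter keeps the credentials and region handling in whichever config mechanism you prefer, and makes it easy to test the function with a stub before pointing it at a real bucket.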

At this point I could test my function. Provided the settings are correct, it should create a file in S3 when you use the test function option in the Azure Portal.

Step 4

In BizTalk I now added a one way Web Http send port using the address for my function

I can get the function address URL from the Azure Portal; it provides the location to execute my function over HTTP. In the adapter properties I have specified the POST HTTP method.

In the message tab for the adapter I have chosen to use the x-functions-key header and have set a key which I generated in the Azure Portal so BizTalk has a dedicated key for calling the function.

Note you may also need to modify some of the WCF timeout and message size parameters.
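If you want to check the function outside of BizTalk, the request the send port makes can be reproduced by hand. The sketch below only builds the request (a POST carrying the `x-functions-key` header) and does not send it. The helper name and the `text/csv` content type are assumptions for illustration; the URL and key are whatever the Azure Portal gives you for your own function.

```python
import urllib.request


def build_function_request(function_url: str, function_key: str,
                           csv_body: bytes) -> urllib.request.Request:
    """Build the POST that mirrors the Web Http send port configuration."""
    request = urllib.request.Request(function_url, data=csv_body, method="POST")
    # Same header the send port sets on its message tab.
    request.add_header("x-functions-key", function_key)
    # Assumed content type; the function in this post just reads the raw body.
    request.add_header("Content-Type", "text/csv")
    return request
```

Passing the returned request to `urllib.request.urlopen` with a `timeout` would actually call the function, which is a quick way to separate function problems from send port problems.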

Step 5

Next I can configure my send port to use the flat file assembler and a map so my canonical message of users is transformed to the appropriate CSV flat file format for the B2B partner. At runtime the canonical message is sent to the send port and converted to CSV. The CSV message is then sent over HTTP to the function and the function saves it to the S3 bucket.
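In BizTalk the map and the flat file assembler do this conversion with no code at all, but the effect of the transformation can be illustrated with a small sketch. The XML shape and the field names here (`User`, `UserId`, `FirstName`, `LastName`, `Email`) are made up for the example; the real canonical schema and the partner's column layout are not shown in this post.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical column layout for the partner file.
FIELDS = ["UserId", "FirstName", "LastName", "Email"]


def users_xml_to_csv(xml_text: str) -> str:
    """Flatten every <User> element into one CSV row, with a header row first."""
    root = ET.fromstring(xml_text)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\r\n")
    writer.writerow(FIELDS)
    for user in root.iter("User"):
        # Missing child elements become empty columns rather than errors.
        writer.writerow([(user.findtext(field) or "").strip() for field in FIELDS])
    return buffer.getvalue()
```

The `csv` module quotes any value containing a comma, which is the kind of detail the flat file schema handles for you on the BizTalk side.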

Conclusion

In this particular case I had a simple quick win interface which we wanted to develop, but unfortunately one of the requirements (S3) was something we couldn’t handle out of the box with the adapter set we had. Because the business value of the interface wasn’t that high and we wanted this done quickly and cheaply, this was a great opportunity to take advantage of the ability to extend BizTalk by calling an Azure Function. I was able to develop the entire solution in a couple of hours and get it into our test environments. The Azure Function was the perfect way to allow us to just get it done and get it shipped so we can focus on more important things.

While some of the other options may have been a prettier architecture, or buy rather than build, the beauty of the function was that it’s barely 10 lines of code and it’s easy to move to one of the other options later if we need to invest more in integration with S3.