In today's organisations there is a strong theme that APIs and iPaaS are the keys to building modern applications because these technologies simplify the integration experience. While this is true, I think one thing that is regularly forgotten is the power of a well-thought-out messaging element within your architecture. When building solutions with APIs and iPaaS we often implement patterns where an action in one application results in an RPC call to another application to get some data or do something. This does not cover the scenarios where applications need a relatively up-to-date, synchronised copy of certain data so that various actions and transactions can be performed.

While data can be moved around behind the scenes with these technologies, I believe there is a big opportunity in evolving your architecture to make your key data entities available on Service Bus in a messaging solution, so you can plug applications into it rather than rebuilding entire application-to-application interfaces each time.

On one project last year this was one of the solutions we implemented, and it has been really interesting to see the customer reap the benefits and repeat the pattern over and over.

The initial project was about implementing Dynamics CRM as a system of engagement in a higher education establishment, to engage with students and provide them with a great support experience. For pretty much any IT project in an education setting there are certain data entities your application probably needs to access:

- Students

- Staff

- Courses

- Course Modules

- Course Timetable

- Which student is on which course

- Marks and Grades

In the project, initial discussions centred on using SSIS and some CRM add-ons for SSIS to do ETL-style integration with Dynamics CRM, sending it the latest staff, student and course information on a daily basis. While this would work, there is little strategic value in it, because the next project is likely to come along and implement the same interfaces again, and before you know it all systems have point-to-point integrations passing this data around.

Our preferred approach, however, was to use Azure Service Bus as an intermediary: publish data from the system of record for each key data entity to Service Bus, then subscribe to this data and send it to CRM. In the next project another subscriber could subscribe to the data on Service Bus and take what it needs.

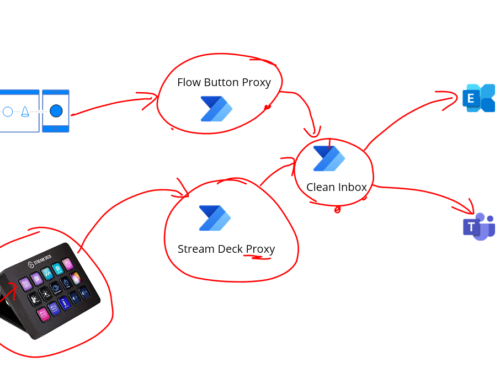

The below diagram shows the initial concept for our project.

Rather than try to solve every possible problem on day one, we chose to adopt this approach as a pattern but implement it in a just-in-time fashion alongside our main business project, so that eventually all key data entities would be available on Service Bus.

One of the key things we thought about was the idea of sending to topics and receiving from queues. In my opinion this is the best-practice way to use Service Bus. Think of a topic as a virtual queue; we had one topic per type of entity.

We also extended this so that each entity would have one topic for all instances of the entity and one topic just for changes. We had two types of system of record for our main data entities. Some applications were able to publish events in near real time (for example, CRM could do this for any data entities it was determined to be the master system for). Other applications could only have their information published if an integration technology pulled the data out and published it. Any system publishing events would use a delta topic, and any system publishing in "batches" would use a topic for delta changes and a topic for all elements.

To elaborate with an example, imagine the student records system has a SQL table of students. We might have a BizTalk process that takes all students from the table and publishes them to the "all" topic. We would then have another BizTalk process that uses a last-modified field on the table and publishes just the records that have changed to the "delta" topic. This means that receiving applications could take messages from either topic, depending on the scenario:

- They always want all records

- They want all records the first time and then after initial sync they only want changes

- The application only ever wants changes
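The "all" and "delta" publishers described above can be sketched roughly as follows. This is a minimal illustration, not the BizTalk implementation from the project: the `students` table layout, the topic names `students-all` and `students-delta`, the `publish` callback and the watermark handling are all assumptions made for the example, and an in-memory SQLite table stands in for the student records system.

```python
import sqlite3


def publish_all(conn, publish):
    """Publish every student row to the (hypothetical) 'students-all' topic."""
    rows = conn.execute(
        "SELECT id, name, last_modified FROM students ORDER BY id"
    ).fetchall()
    for student_id, name, modified in rows:
        publish("students-all", {"id": student_id, "name": name, "last_modified": modified})
    return len(rows)


def publish_delta(conn, publish, watermark):
    """Publish rows modified after `watermark` and return the new watermark."""
    rows = conn.execute(
        "SELECT id, name, last_modified FROM students "
        "WHERE last_modified > ? ORDER BY last_modified",
        (watermark,),
    ).fetchall()
    for student_id, name, modified in rows:
        publish("students-delta", {"id": student_id, "name": name, "last_modified": modified})
    # If nothing changed, keep the old watermark so the next run re-checks from there.
    return rows[-1][2] if rows else watermark


# Stand-in for the student records system (assumed schema, ISO-8601 timestamps).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, last_modified TEXT)")
conn.executemany(
    "INSERT INTO students VALUES (?, ?, ?)",
    [
        (1, "Ada", "2024-01-01T09:00:00"),
        (2, "Grace", "2024-01-02T09:00:00"),
        (3, "Alan", "2024-01-03T09:00:00"),
    ],
)

sent = []  # records (topic, body) pairs instead of calling a real broker


def publish(topic, body):
    sent.append((topic, body))


all_count = publish_all(conn, publish)
new_watermark = publish_delta(conn, publish, "2024-01-01T12:00:00")
```

A real publisher would need to persist the watermark between runs, and would have to decide how to handle rows that share the same last-modified value as the watermark.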

This approach would give us a really rich hub of data and changes from which applications could take what they needed. In the diagram below you can see how we created a worker queue for CRM to be fed messages, and we had many more topics to which applications were publishing data. Using Azure Service Bus we can define subscriptions with rules that determine which applications want which messages. We then use the Forward To property to forward messages from the subscription to the worker queue for CRM.
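To make the routing idea concrete, here is a small in-memory model of "send to topics, receive from queues". It is only a sketch of the concept and does not use the Azure Service Bus SDK: the `Bus` and `Subscription` classes, the queue and topic names, and the `department` filter are all invented for illustration. In Azure the equivalent pieces would be subscription rules and the Forward To setting on each subscription.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List, Optional

Message = Dict[str, str]


@dataclass
class Subscription:
    name: str
    rule: Callable[[Message], bool]  # which messages this subscriber wants
    forward_to: str                  # name of the worker queue to forward to


@dataclass
class Bus:
    queues: Dict[str, Deque[Message]] = field(default_factory=dict)
    topics: Dict[str, List[Subscription]] = field(default_factory=dict)

    def create_queue(self, name: str) -> None:
        self.queues[name] = deque()

    def create_topic(self, name: str) -> None:
        self.topics[name] = []

    def subscribe(self, topic: str, sub: Subscription) -> None:
        if sub.forward_to not in self.queues:
            raise KeyError(f"unknown queue: {sub.forward_to}")
        self.topics[topic].append(sub)

    def send(self, topic: str, message: Message) -> None:
        # Each matching subscription gets its own copy, forwarded to its queue.
        for sub in self.topics[topic]:
            if sub.rule(message):
                self.queues[sub.forward_to].append(dict(message))

    def receive(self, queue: str) -> Optional[Message]:
        q = self.queues[queue]
        return q.popleft() if q else None


bus = Bus()
bus.create_queue("crm-worker")
bus.create_topic("students-delta")
bus.create_topic("staff-delta")

# CRM wants every student change, but only teaching staff (hypothetical rule).
bus.subscribe("students-delta", Subscription("crm-students", lambda m: True, "crm-worker"))
bus.subscribe(
    "staff-delta",
    Subscription("crm-staff", lambda m: m.get("department") == "Teaching", "crm-worker"),
)

bus.send("students-delta", {"entity": "student", "id": "S1"})
bus.send("staff-delta", {"entity": "staff", "id": "T1", "department": "Teaching"})
bus.send("staff-delta", {"entity": "staff", "id": "T2", "department": "Estates"})
```

Adding a new consuming application in this model is just another queue plus one subscription per topic it cares about; the publishers are not touched.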

Just a quick point to note: we never receive messages directly from a subscription; we always use Forward To and send the messages to a queue. The reasons for this are:

- You can't define fine-grained security for a topic

- Forward To allows the worker queue to process many different kinds of messages

- It's easier to see which applications are receiving which messages
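Because one worker queue can receive several kinds of message, the receiving side usually needs to dispatch on message type. A minimal sketch of that idea follows; the `entity` property, the handler names and the `crm_log` list are assumptions for the example, standing in for whatever the real worker does when it updates CRM.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

crm_log: List[str] = []  # stands in for calls to the target application


def upsert_student(message: Message) -> None:
    crm_log.append(f"student:{message['id']}")


def upsert_staff(message: Message) -> None:
    crm_log.append(f"staff:{message['id']}")


# One handler per kind of message the worker queue is expected to carry.
HANDLERS: Dict[str, Callable[[Message], None]] = {
    "student": upsert_student,
    "staff": upsert_staff,
}


def process(message: Message) -> bool:
    """Dispatch one message; return False if no handler knows its type."""
    handler = HANDLERS.get(message.get("entity", ""))
    if handler is None:
        return False  # a real worker might dead-letter the message here
    handler(message)
    return True


results = [
    process({"entity": "student", "id": "S1"}),
    process({"entity": "staff", "id": "T1"}),
    process({"entity": "timetable", "id": "X9"}),
]
```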

Once our initial project had made the core data entities available on Service Bus, things became much easier. When the next project came along and wanted staff information, we could simply add a subscription and routing to forward staff messages to a queue for the new application. The diagram below shows how a staff appraisal application was easily fed staff information without having to care about the staff system of record. That problem had already been solved.

You may notice that the staff appraisal application was getting all staff information every time it was published. When the project reached a more stable point, we modified the routing rules so that the staff appraisal application was only sent staff records that had changed. To do this we just modified the subscription rules, as in the diagram below:

In the next project we had a similar scenario, where this time the virtual learning environment needed course data. This data was already available on Service Bus, so we could create subscriptions and start sending the information with significantly less effort. The diagram below illustrates the extension to the architecture:

After a number of iterations of our platform, with various projects adding more key data entities, we now have most of the key organisational data available in a plug-and-play architecture. While this may sound a bit like the ESB pattern to some, the key thing to remember is that the challenge with ESBs was that they combined messaging with logical processing and business process, which made things very complex. In Service Bus the focus is purely on messaging. This keeps things very simple and allows you to build the architecture up with the right technologies for each application scenario. For example, in one scenario I might have a Logic App which processes a queue and sends messages to Salesforce. We might have a BizTalk Server which converts a message to HL7 and sends it to a healthcare app, or I might do things in .NET code with a WebJob. I have lots of choices on how to process the messages. The only real coupling in this architecture is the coupling of an application to a queue and some message formats.

There are still some challenges you may come across in this architecture, mainly in cases where an application needs more information than the core message provides. Lots of applications have slightly different views of data entities and different attributes. One possibility is to use something like a Logic App so that, when you process a message, you make an API call to look up additional data to help with message processing. This would be a typical enrichment pattern.
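The enrichment step can be sketched in a few lines. This is an illustration only: the `lookup_student` function and its `DIRECTORY` data stand in for the API call a Logic App (or any other processor) might make, and the field names are invented. The one design choice shown is that fields on the core message win when the lookup returns a conflicting value.

```python
from typing import Callable, Dict, Optional

Message = Dict[str, str]

# Stand-in for a remote system that holds extra attributes (hypothetical data).
DIRECTORY: Dict[str, Message] = {
    "S1": {"email": "s1@example.edu", "name": "Directory Name"},
}


def lookup_student(student_id: str) -> Optional[Message]:
    """Pretend API call; returns None when the student is unknown."""
    return DIRECTORY.get(student_id)


def enrich(message: Message, lookup: Callable[[str], Optional[Message]]) -> Message:
    """Return a copy of the message with extra attributes from `lookup` added."""
    extra = lookup(message["id"]) or {}
    # Core message fields are applied last, so they override looked-up values.
    return {**extra, **message}


enriched = enrich({"entity": "student", "id": "S1", "name": "Ada"}, lookup_student)
unknown = enrich({"entity": "student", "id": "S2", "name": "Grace"}, lookup_student)
```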

The key thing I wanted to get across in this article is that, at a time when we are being told APIs and iPaaS are simplifying integration, messaging as an architecture pattern still has a very valuable and effective place. Done well, it can really empower your business, rather than having you build the same interface over and over again in slightly different forms.