Recently I have been thinking about a use case where you want to expose legacy applications via a hybrid API, but the underlying LOB applications are not really fit for purpose as the sub-systems behind that API. In this case we have some information held across 2 databases which are years old and clogged full of crappy technical debt that has festered over the years. Creating an API with a strong dependency on these would be like building on quicksand.

At the same time the organisation has a desire at some point (unknown timescale) to transition from the legacy applications to something new but we do not know what that will be yet.

I love these kinds of architecture challenges. They emphasise the point that there isn’t really such a thing as a target architecture: things always change, so you need to think of architecture as always in transition, as a journey. If we are going to do something now, we should add some thinking about what we might do later to make sure the journey is smooth.

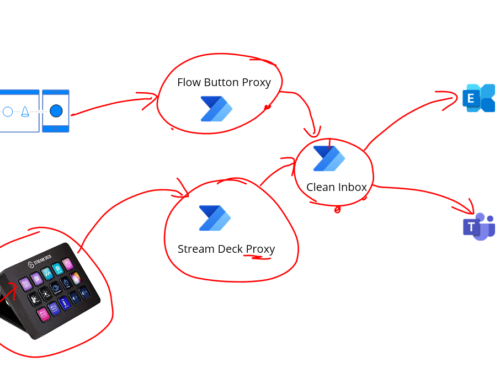

Expected Architecture

Below is a diagram of one of a number of ways you might expect to implement the typical hybrid API architecture using the Microsoft technology stack today. This would be one of the typical candidate architectures for consideration for this pattern in normal circumstances.

The problem with this architecture is mainly the dependency on the LOB systems, which means we inherit some very risky stuff for our API. For example, if we suddenly had a large number of users on the API (or even a small number), you could easily see these legacy systems creaking.

Proposed Architecture

What I was considering instead was the idea that if we can define a canonical data definition for the data which we know doesn’t change very often then we can sync the data to the cloud and the API would use that cloud data to drive the API. This means our API would be very robust and scalable. At the same time it also means we take all of the load away from the LOB applications so we do not risk breaking them.

Once the data is in a nice JSON-friendly format in the cloud, we have a distinct layer of separation between the API and the underlying LOB applications. For our future transition to replace the LOB applications, this means we just need to keep the JSON files up to date to support the API, which should be relatively simple to do.
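To make the canonical data idea a little more concrete, here is a minimal Python sketch of the shaping step, assuming the canonical entity is a course. Every name in it is hypothetical and only for illustration: the `Course` fields, the legacy column names (`CRS_ID`, `CRS_TITLE`, `PROVIDER_NM`, `DUR_DAYS`) and the `courses/<id>.json` naming scheme are not taken from the real systems. It only builds the JSON documents in memory; uploading them to blob storage is left out.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Course:
    """Hypothetical canonical shape for one course."""
    course_id: str
    title: str
    provider: str
    duration_days: int


def to_canonical(catalog_row, schedule_row):
    """Merge one row from each (assumed) legacy database into a Course."""
    return Course(
        course_id=str(catalog_row["CRS_ID"]).strip(),
        title=catalog_row["CRS_TITLE"].strip(),
        provider=schedule_row.get("PROVIDER_NM", "").strip(),
        duration_days=int(schedule_row.get("DUR_DAYS", 0)),
    )


def blob_name(course):
    """Assumed naming scheme: one JSON file per course."""
    return f"courses/{course.course_id}.json"


def render_blobs(catalog_rows, schedule_rows):
    """Return {blob name: JSON text} for every course in the catalog."""
    # Index the second source by course id so rows can be joined.
    schedule_by_id = {str(r["CRS_ID"]).strip(): r for r in schedule_rows}
    blobs = {}
    for row in catalog_rows:
        course_id = str(row["CRS_ID"]).strip()
        course = to_canonical(row, schedule_by_id.get(course_id, {}))
        blobs[blob_name(course)] = json.dumps(asdict(course), sort_keys=True)
    return blobs
```

The point of the sketch is that the API only ever sees the canonical shape. Whatever replaces the legacy databases later just has to produce the same JSON files.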

At present I think BizTalk is our best tool for bending the LOB data to the JSON formats, as it does dirty LOB integration very well and can be scheduled to run when required. In the future we may or may not use BizTalk to do the sync process; that’s a decision we will make at the time.

API Management to Blob Storage

Following on from my previous post, by using APIM and a well-structured data model for the files in blob storage, it became very easy to expose those files as the API with zero code. We could simply use the URL template rewrite features to convert the API-proxy-friendly URL for the client to the back-end Azure Blob Storage URL.
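In APIM itself this mapping is declared in policy rather than written as code, so the Python below is only a sketch of what the rewrite does conceptually. The URL templates, the container name and the storage account in the usage line are hypothetical.

```python
import re


def rewrite(path, public_template, backend_template, backend_base):
    """Map a client-facing path to a back-end blob URL, or None if no match."""
    # Turn "/courses/{courseId}" into a regex with one named group
    # per {placeholder}; everything else is matched literally.
    escaped = re.escape(public_template)
    pattern = re.sub(r"\\\{(\w+)\\\}", r"(?P<\1>[^/]+)", escaped)
    match = re.fullmatch(pattern, path)
    if match is None:
        return None
    # Fill the captured values into the back-end template.
    return backend_base.rstrip("/") + backend_template.format(**match.groupdict())


# Hypothetical storage account and container, for illustration only.
url = rewrite(
    "/courses/101",
    "/courses/{courseId}",
    "/courses/{courseId}.json",
    "https://examplestorage.blob.core.windows.net/coursedata",
)
```

The client sees a clean resource-style URL, while the back end is just a file lookup, which is why a predictable file naming scheme in blob storage matters so much for this approach.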

The net result is that our API would scale massively without too much trouble, need zero code, be able to use all of the APIM features, and give client applications a great way to search the course library without us worrying about the underlying LOB applications.

The video below gives a quick walkthrough of this.